Customer-Driven Testing

Customers can and should strongly influence your testing strategy and test plans. This post describes Customer-Driven Testing, the part of the Customer-Driven Quality series that focuses on the testing phase of the life cycle.

Analytics Driven Test Plans

Your product probably has some type of usage analytics that tracks which features are used. For web applications, the user experience design team is most likely tracking user flow through the application. Your product may have a “user audit log” or some other way to track usage of the program. Your test teams should learn how usage is tracked, and use that data to improve your test plan.

One example of a team finding useful testing insights in usage data comes from the telecommunications industry. I was leading the test team for a network management system. This system had about 150 different features. The users were customer support agents, either diagnosing their customers’ networks or provisioning new service. The user activity log tracked usage for the purpose of auditing the quality of the customer support agents.

This system was deployed in a secure data center. We asked our customers for a dump of the user activity log covering a 30-day period. We aggregated and analyzed the logs to learn the relative usage of each feature. (Microsoft Excel pivot tables are your friend for this type of analysis.)

We learned that 92% of all usage of our product happened in just 3 of the roughly 150 features. In other words, the top 2% of features accounted for 92% of the usage. This was very illuminating. We made sure that our testing for these 3 features was very thorough, and automated.
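The same aggregation can be done with a short script instead of a spreadsheet. The sketch below is only an illustration: the log format (comma-separated `timestamp,user,feature`), the feature names, and the function name `usage_share` are all assumptions, not the format of the system described above.

```python
from collections import Counter


def usage_share(log_lines, top_n=3):
    """Count feature usage and report how much of it the top_n features cover.

    Assumes each log line looks like "timestamp,user,feature" (hypothetical format).
    Returns (list of (feature, count) for the top features, share of total usage).
    """
    counts = Counter()
    for line in log_lines:
        parts = line.strip().split(",")
        if len(parts) < 3:
            continue  # skip blank or malformed lines
        counts[parts[2]] += 1

    total = sum(counts.values())
    top = counts.most_common(top_n)
    share = sum(count for _, count in top) / total if total else 0.0
    return top, share


# Made-up sample data, for illustration only.
sample_log = (
    ["2024-01-01T09:00:00,agent1,diagnose_line"] * 5
    + ["2024-01-01T09:05:00,agent2,provision_service"] * 3
    + ["2024-01-01T09:10:00,agent3,reset_modem"]
    + ["2024-01-01T09:15:00,agent1,view_report"]
)

top_features, share = usage_share(sample_log, top_n=2)
for feature, count in top_features:
    print(f"{feature}: {count}")
print(f"Top features cover {share:.0%} of usage")
```

Running this against a real 30-day export would show whether your own usage is as concentrated as ours was, and which features deserve the deepest automated coverage.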

The usage data was also very useful to inform our risk-based testing strategy.

Rolling Deployment

When testing is complete and the product is ready to deploy to customers, you may want to control the speed of that deployment to limit the impact of any bugs that escaped your testing.

In the first day after fully deploying our application, we see several orders of magnitude more product usage than in the entirety of our testing cycle. To make sure we have a smooth deployment, we deploy in stages. This helps us ensure we didn’t miss anything in our development process.

We deploy to approximately 10% of our customer base the first evening. Then we closely monitor the feedback from these customers, looking for any new or unusual issues. The balance of customers receive the new release a couple of days later. This process gives us the chance to correct any bugs that were missed by the test team.
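One common way to pick a stable first-wave cohort is to hash each customer identifier into a bucket from 0 to 99 and release to the buckets below the rollout percentage. This is a minimal sketch of that general technique, not necessarily how our own deployment chose its 10%; the function name `in_rollout`, the release label, and the customer IDs are hypothetical.

```python
import hashlib


def in_rollout(customer_id: str, release: str, percent: int) -> bool:
    """Return True if this customer falls inside the first `percent` of buckets.

    The release label is mixed into the hash so that a different set of
    customers goes first on each release (an assumption of this sketch).
    """
    digest = hashlib.sha256(f"{release}:{customer_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < percent


# Made-up customer IDs, for illustration only.
customers = [f"customer-{n}" for n in range(1000)]
first_wave = [c for c in customers if in_rollout(c, "release-example", 10)]
print(f"{len(first_wave)} of {len(customers)} customers get the release first")
```

Because the bucket depends only on the customer and the release, raising the percentage later adds customers without removing any who already have the release.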

We’ve also been able to use a rolling deployment for software that is distributed to customers and installed by them. We can throttle the distribution to achieve the same effect. One way to control distribution is to post your software on the server, but control when each group of customers is notified.

Performance Testing in the Real World

The majority of our performance and scalability testing happens in the lab. Our test environment is built to allow repeatable tests, with diagnostic tools, and has features to help with the test team productivity. These characteristics help with testing, but the real world is messier.

Performance and scalability testing should be supplemented and calibrated by using a remote testing service that more closely represents the customer experience. The remote testing service that we use executes test scripts from many locations across the world, which provides information on how our application is running from our customer’s perspective, not just from inside our firewall.

If an external service provider is beyond your budget, you can use free cloud computing services and deploy your test scripts in the cloud.

Test with Customer Data

Many applications need to use test data. Often, the customer’s data is not as “clean” as our test data. Customers add and delete records over time. They upgrade from version to version, and migrate from one computer to another. Over time, these activities can introduce irregularities into the database, such as dangling pointers.

If possible, legal, and ethical, try to use actual customer data in your tests. You will need to make sure the use is permitted by the End User License Agreement. Going beyond legal, you should also make sure it’s ethical to use customer data. I rely on two key principles when using customer data: explicit permission and a fail-safe way of protecting customer data.

Explicitly asking permission is a good practice, even if use is allowed by the license agreement. Out of the hundreds of people that I know personally, only one actually reads those agreements, and she is a lawyer. Don’t assume your customer knows the terms of the license agreement.

When using customer data, I’d also recommend putting extra controls in place to protect customers’ data and privacy. These controls should positively protect their data in a fail-safe manner. Practices like password protection, keeping data behind the firewall, and physical security are good, but it’s still possible for the data to leak out. Obfuscating private data provides stronger protection, and may not impact your ability to test.

Obfuscating private data can be accomplished by a script or program that substitutes text, replacing actual data with random or scrambled representations. Your program should preserve the structure of the data, but change the contents. For example, email addresses, phone numbers, names, and street addresses have structure that should be preserved (an email address should still look like account@host.tld).
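A structure-preserving scrambler can be quite small. The sketch below is one possible approach under stated assumptions: it replaces every letter with a random letter of the same case and every digit with a random digit, and leaves punctuation such as `@`, `.`, `-`, and spaces in place. The function name `scramble` and the sample values are hypothetical, and Python’s `random` module is not cryptographically secure, so treat this as an illustration rather than a vetted anonymization tool.

```python
import random
import string


def scramble(text: str, rng: random.Random) -> str:
    """Replace letters and digits with random ones, keeping length and punctuation."""
    out = []
    for ch in text:
        if ch.isdigit():
            out.append(rng.choice(string.digits))
        elif ch.isalpha():
            # Keep the case pattern so names still look like names.
            pool = string.ascii_uppercase if ch.isupper() else string.ascii_lowercase
            out.append(rng.choice(pool))
        else:
            out.append(ch)  # separators such as "@", ".", "-", "(" stay in place
    return "".join(out)


# Made-up sample values, for illustration only.
rng = random.Random()
print(scramble("Jane.Doe@example.com", rng))
print(scramble("(555) 123-4567", rng))
```

This version scrambles each field independently, so it does not keep related fields consistent with each other, and the same input will not map to the same output twice unless you add a lookup table or a keyed hash.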

Depending on your application, your tool may need to be smart enough to keep data coherent. For example, an application that I worked on did a coherence check on addresses, making sure the city & state matched the zip code (postal code).

When is testing finished?

One question that comes up very often in software testing is: when are we finished testing? The answer is sometimes based on the exit criteria being met, or on the project running out of time. In customer-driven testing, testing is complete when the customer says so.

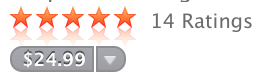

If your team is tracking customer feedback, perhaps with a 1-5 star rating system, you can declare the project “done” only when the customer rating reaches 4 stars or better. The development and test team would stay intact until customers are satisfied with the results.

Alpha and Beta Test

Alpha and Beta tests are the classic software development practices for including customers in your testing program. The test team should be active participants in these programs, and should monitor their effectiveness.

See more customer-driven practices or continue to see how to improve your testing after release to customers.