By far, the most popular mobile game this year has been Pokémon Go. As you might expect with a release of this magnitude, there have been some glitches. These issues, and the reaction from the player base, illustrate two core tenets of software quality for SaaS offerings:

- Availability is vital: it is the most important thing to get right in SaaS

- Regression bugs, which remove functionality, are especially painful for customers

Pokémon Go probably doesn’t require an overview, but just in case… Pokémon Go is a mobile, augmented reality game where players travel the real world to find creatures (called Pokémon), resources to help play the game (at Pokéstops), and places to compete with other players’ Pokémon (Gyms). Players are called trainers, and the objective is to train the most powerful Pokémon and to collect all of the different types.

Niantic, the company behind Pokémon Go, has rolled out Pokémon Go in many countries very quickly. In terms of popularity, the launch was extremely successful. In the US, Pokémon Go was released on July 6th, and by July 11th, its number of daily active users had surpassed Twitter’s. Take another look at those dates: it took only 5 days to go from 0 to Twitter scale.

Availability is the most important feature

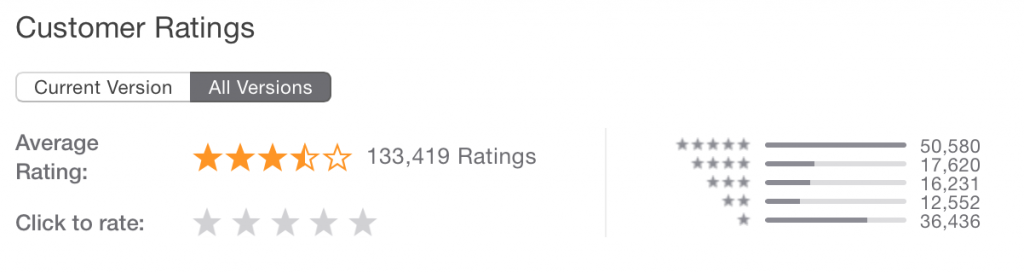

Niantic was not prepared for that load, and the servers showed it. Customers responded with negative reviews and very public complaints:

Even 5-star reviews complain about availability:

Once the servers seemed stable in the US, Niantic released Pokémon Go in new countries, only to bring the instability back for existing users. As is common these days, new Twitter accounts were even created to voice the frustration:

Players were frustrated because many of the resources in the game have timers associated with them. A player would spend a timed resource, only to have the servers go offline while its timer ran down.

Bugs are bad, regression bugs even worse

Many players are also complaining about the Nearby Pokémon feature that was available at the first launch. This feature gave players an indication of how close a Pokémon was to their current location, so they could hunt it down. Distance was represented by the number of footprints next to the Pokémon’s image; the fewer the footprints, the closer the creature:

One of the updates introduced a bug that caused all nearby Pokémon to be shown with 3 footprints, regardless of their actual distance:

In the latest update, Niantic removed the footprints. I’m assuming they followed the solid software principle that “it’s better to show no information than false information”; however, the players were livid. Players were, rightly, frustrated that a feature had been removed from the app.
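The principle of showing no information rather than false information can be sketched in a few lines. This is a minimal, hypothetical Python sketch, not Niantic’s code: the function name `footprints_for` and the distance thresholds in `FOOTPRINT_THRESHOLDS` are invented for illustration. It contrasts hiding the indicator when the distance is unknown with falling back to a fixed value, which would produce the same symptom players saw with the 3-footprint bug.

```python
from typing import Optional

# Hypothetical (max distance in meters, footprint count) pairs.
# Illustrative values only; these are NOT Niantic's actual thresholds.
FOOTPRINT_THRESHOLDS = [(50.0, 1), (100.0, 2), (200.0, 3)]


def footprints_for(distance_m: Optional[float]) -> Optional[int]:
    """Return how many footprints to show for a nearby Pokémon.

    Returns None (meaning: hide the indicator) when the distance is unknown,
    invalid, or beyond the tracked range, instead of falling back to a
    misleading default such as 3.
    """
    if distance_m is None or distance_m < 0:
        # No trustworthy data: show nothing rather than something false.
        return None
    for max_distance, count in FOOTPRINT_THRESHOLDS:
        if distance_m <= max_distance:
            return count
    # Farther than the largest threshold: outside the tracked range.
    return None


if __name__ == "__main__":
    for d in (30.0, 150.0, None):
        print(d, footprints_for(d))
```

Returning `None` forces the UI layer to decide explicitly how to render “unknown”, whereas a numeric default silently looks like real data to the player.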

In hindsight, the players would have had a better experience if the footprint tracker had not been included in the early launch and had instead been added later, once all of the glitches were fixed. This feature was a relatively small part of the game, but removing it intensified the players’ reaction. Regression bugs that remove functionality are very painful in SaaS offerings.

Overall, Pokémon Go has so far been a great success, and my guess is that Niantic will fix these issues. Once they are fixed, they will eventually be forgotten. The players are very passionate about the game, and they will continue to play. But it will take some time for the players to forget, and the app store ratings will remain.

The popularity of Pokémon Go will allow Niantic to survive these glitches, but your app might not have such a passionate customer base. For any other app or game, how many of the players would have deleted it and never returned?